# Using package::function() without attaching the package

data_unido <- gsheet::gsheet2tbl("https://docs.google.com/spreadsheets/d/....edit#gid=416085055")4 Data Wrangling

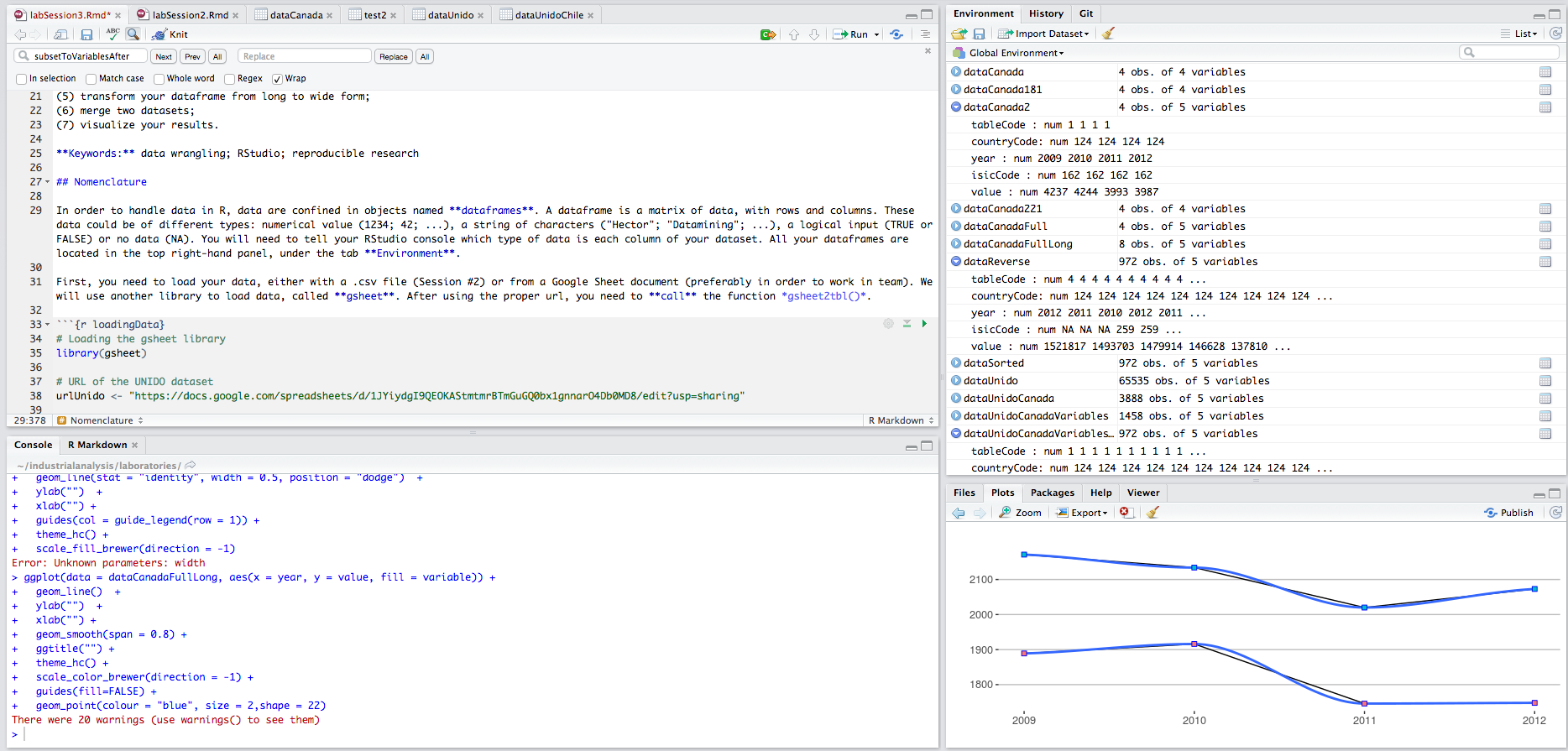

Data wrangling is a crucial step in any data science project, involving collecting, cleaning, transforming, and preparing data for analysis. In a typical data pipeline, raw data must be gathered from various sources and then manipulated into a structured format suitable for visualization or modeling. In this chapter, we focus on using R for effective data wrangling. R is a powerful functional programming language that excels at data manipulation and analysis, making it a popular choice in fields such as biology, medicine, economics, finance, psychology, sociology, and the humanities. Our goal is to harness these tools for business analytics in a reproducible way – meaning that all data operations are documented in code (for example, using RStudio and R Markdown) so that results can be easily reproduced and updated.

Throughout this chapter, we will highlight important tips in bold or italics and demonstrate code examples in R. By the end, you should be comfortable performing common data wrangling tasks in R.

At the end of the chapter, you should be able to:

- Import data from various sources (e.g., CSV files and Google Sheets) into R.

- Understand what a data frame is in R and how to inspect its contents.

- Understand the difference between using

package::function()andlibrary(package)when working with R packages. - Use the pipe operator (

%>%) to write clear, readable sequences of data manipulation steps. - Add new columns to a data frame, rename or delete existing columns.

- Subset rows of a data frame based on conditions (filtering).

- Sort (arrange) data frame rows in ascending or descending order.

- Transform data between long and wide formats (tidy data principles using tidyr functions).

- Merge multiple data frames based on common keys (joining datasets).

- Save your cleaned or transformed data to a file for later use.

We will illustrate these techniques using a real dataset from the United Nations Industrial Development Organization (UNIDO), which contains various industrial statistics for different countries over time. Originally, this database has 655,350 data points, but through wrangling we will distill it down to a small summary for visualization. The concepts and functions covered, however, are applicable to any dataset.

4.1 R as a Functional Language

R is a functional programming language, meaning it is built around functions as the primary way to operate on data. In a functional language, you typically call specific functions (which may internally execute many operations) to accomplish tasks. This approach allows you to perform complex operations with relatively simple and human-readable code. Other examples of popular functional or scripting languages used in data science are Python and Julia.

Functional languages offer significant benefits: they make it straightforward to express complex transformations in just a few lines of code. For instance, with the right functions, you can programmatically collect all patent records worldwide and analyze innovation trends, or gather thousands of epidemiological research articles and identify leading research teams—all tasks that can be done on a personal computer with surprisingly little code. (Of course, such intensive tasks might be slow or require substantial computing time, but they are feasible.) Modern businesses can leverage these tools to transform their operations, turning vast raw datasets into actionable insights. In fact, the availability of powerful open-source tools like R compels businesses to adapt and become more data-driven.

At the same time, using a functional language means you must remember or look up the appropriate functions to use for each task. The R ecosystem provides thousands of functions, both in base R and in contributed packages, to handle nearly any data manipulation or analysis need. This open-source ecosystem is a double-edged sword: it fosters rapid innovation (anyone can create a new package or function and share it), but it also means there can be overlapping or incompatible functions. The community mitigates these issues through documentation, forums like StackOverflow, and continuous package development to fix bugs and improve compatibility.

R, Python, or Julia? Once you learn one high-level language, it becomes easier to learn others. R, Python, and Julia share many concepts and have similar capabilities for data science tasks. R has traditionally been favored in statistics and fields requiring complex data analysis and reporting; Python is often favored in engineering and general software development contexts; Julia is newer but offers speed advantages for heavy computations. In this book we focus on R, but keep in mind that the core data manipulation concepts are transferable across languages.

4.2 The Grammar of R: Packages, Libraries, and Pipes

Before diving into data manipulation in R, it’s important to understand some basic “grammar” of how R code is structured, especially when using add-on packages.

Using Packages and Libraries

The real power of R comes from its packages – collections of functions, data, and documentation that extend R’s capabilities. There are thousands of packages available for everything from data wrangling (e.g., dplyr, tidyr) to visualization (ggplot2) to specialized analyses. In this chapter, we will use several packages, including readr (for reading data), gsheet (for Google Sheets access), dplyr (for data frame manipulation), tidyr (for reshaping data), and tidyverse (which actually bundles many data science packages together).

Attaching a package with library(): To use a package’s functions in your R session, you usually need to attach it by calling library(packageName). For example, to use functions from the dplyr package, you would run: library(dplyr). Once a package is attached, you can call its functions by name without any special prefix.

Using a function with package::function(): Alternatively, you can use a function from a package without attaching the entire package by using the syntax packageName::functionName(). For instance, gsheet::gsheet2tbl() calls the function gsheet2tbl from the gsheet package directly. This approach is convenient when you only need one function from a package or want to avoid name conflicts between functions in different packages. The downside is that you must prepend the package name each time you call the function, which can make code verbose if you use many functions from the same package.

Example – reading from Google Sheets: Below we demonstrate both methods. We use a Google Sheets URL for the UNIDO dataset as an example (more on data importing in the next section):

In the code above, gsheet2tbl() is explicitly called from the gsheet package. Alternatively, we could attach the package first:

# Attaching the package, then calling the function directly

library(gsheet)

data_unido <- gsheet2tbl("https://docs.google.com/spreadsheets/d/....edit#gid=416085055")After running library(gsheet), any function defined in the gsheet package (such as gsheet2tbl) can be used without the gsheet:: prefix. Attaching packages at the start of your script (or R Markdown document) is often a good practice if you will use many functions from those packages, as it keeps the code cleaner and easier to read.

Tip: Many R users include a block at the top of their script or notebook to load all necessary libraries at once. For example:

This ensures all functions you need are available throughout your analysis.

The Pipe Operator %>%

When writing a sequence of data manipulation steps, R’s pipe operator (%>%) from the magrittr package (which is included in the tidyverse) can make code more readable. The pipe passes the output of one function as the input to the next function, allowing you to chain operations in a left-to-right style that often mimics natural language.

Without pipes, you might nest function calls or create intermediate variables for each step. With pipes, you can instead write a clear linear sequence. For example, calling the logarithm function on a variable x normally looks like log(x). Using the pipe, you can rewrite this as:

This statement says “take x, then apply log() to it.” It’s a trivial example, but pipes shine in more complex sequences. Consider using the dplyr function glimpse() to get a quick overview of a dataset (we’ll discuss glimpse() in detail later). We can pipe our data frame into glimpse() as follows:

This code takes the data_unido data frame and pipes it into glimpse(), which then prints an informative summary of the data frame’s structure. Using the pipe here saves us from having to write glimpse(data_unido). More importantly, if we want to perform multiple operations in sequence (filtering, mutating, summarizing, etc.), the pipe allows us to write these steps in a clear order, instead of having to read from the inside out as with nested function calls. We will use %>% frequently in our examples as it is a cornerstone of readable tidyverse code.

Tidy Data Principles

In R (and data science in general), there is a recommended structure for datasets called tidy data. Hadley Wickham, a chief scientist at RStudio, coined the principles of tidy data which make data easier to manipulate and analyze. A dataset is tidy if:

Each variable is in its own column. For example, if you have variables like “Country”, “Year”, and “Population”, each of these should be a separate column in your data frame rather than combined in one column.

Each observation is in its own row. Each row typically represents one observation or record (e.g., a single country-year combination in a country-year dataset).

Each value is in its own cell. A cell is the intersection of a row and a column, and it should hold only a single value (not a list of values or a combination of multiple pieces of information).

Following these rules makes it much easier to perform analysis, because most of R’s functions (especially in the tidyverse) are designed with tidy data in mind. If your data is not tidy, you might need to reshape it (for instance, converting from a “wide” format to a “long” format or vice versa) before analysis. We will practice this when we use tidyr functions like pivot_longer() and pivot_wider() later in this chapter.

Data Frames and Types in R

The primary data structure we will work with is the data frame (or its modern equivalent, the tibble). A data frame is essentially a table of data where each column is a variable and each row is an observation, consistent with the tidy data format described above. Columns in a data frame can be of different types:

- Numeric (numbers, e.g.,

42or3.14) - Character (strings of text, e.g.,

"Canada") - Logical (Boolean values

TRUEorFALSE) - Factors (categorical variables with a fixed set of possible values)

- Others (dates, times, etc., which are usually handled by specialized classes)

When you import data into R, the columns might not always be in the desired type. For example, numbers might be read in as strings (character) due to formatting issues. It’s important to inspect your data and ensure each column is encoded with an appropriate type. You can convert column types using functions like as.numeric(), as.character(), as.factor(), etc.

RStudio’s interface can help you keep track of data frames and their columns. When you load a data frame, it appears in the Environment tab (typically in the upper right panel of RStudio) listing the object’s name, type, and a preview of its contents. If you click on a data frame in the Environment, it will open in a spreadsheet-like viewer for you to browse.

Let’s say we have loaded a data frame called data_unido containing the UNIDO dataset. We can check its structure and contents with a few useful commands:

-

colnames(data_unido)will show all column names. -

dim(data_unido)will show the dimensions (number of rows, number of columns). -

head(data_unido)shows the first six rows;tail(data_unido)shows the last six. -

str(data_unido)or the dplyr alternativeglimpse(data_unido)shows the structure of the data frame, including each column’s name, type, and a preview of values. -

summary(data_unido)gives summary statistics for each column (for numeric columns, min/median/mean/max, etc., and for categorical columns, frequency of each category).

As an example, after loading data_unido, we might want to ensure certain columns are numeric. In the UNIDO dataset, columns like country codes, year, and indicator codes were read in as text. We can convert them to numeric as follows:

# Suppose data_unido is already loaded as a data frame.

# Convert some columns from character to numeric type:

data_unido$tableCode <- as.numeric(data_unido$tableCode)

data_unido$countryCode <- as.numeric(data_unido$countryCode)

data_unido$year <- as.numeric(data_unido$year)

data_unido$isicCode <- as.numeric(data_unido$isicCode)

data_unido$value <- as.numeric(data_unido$value)

# Now check the structure and summary to confirm changes:

str(data_unido)

summary(data_unido)In this code, data_unido$year <- as.numeric(data_unido$year) takes the year column of data_unido, converts it to numeric, and overwrites the original column with the numeric version. We repeat this for other columns that should be numeric. After conversion, summary(data_unido) will show, for example, the minimum and maximum year, which confirms that the year column is numeric and gives an idea of the time range.

Note: If a column contains non-numeric characters (e.g., “N/A”, or mixed text and numbers), using

as.numeric()on it will result inNA(missing values) for those entries and a warning. Always make sure the data is clean or handle such cases (perhaps using functions likeparse_number()from readr which can extract numbers from strings).

Now that we have covered the basics of R’s grammar and how to inspect data, let’s move on to actually getting data into R.

4.3 Importing Data

Real-world data can come in many formats and from many sources. Common sources include local files (like CSVs or Excel spreadsheets), databases, web APIs, and online data repositories. R provides tools to import data from all these sources. In this section, we will focus on two straightforward cases: importing a CSV file and importing data from a Google Sheet. (We will also briefly mention other formats like SPSS/Stata and the use of the data.table package for large data.)

Importing a CSV File

A CSV (Comma-Separated Values) file is a plain text file that stores tabular data. Each line of the file is a row, and columns are separated by commas (or sometimes other delimiters like tabs or semicolons). CSVs are one of the most common formats for sharing data.

To import a CSV file in R, you can use the base R function read.csv(). However, we will use read_csv() from the readr package (part of the tidyverse), which is typically faster and produces tibbles (a modern form of data frame). The usage is similar.

Before reading the file, make sure the file is accessible by R. If you’re working in RStudio, you can upload the file to your RStudio workspace or ensure your working directory is set to where the file is located. In RStudio Server or Cloud, you might upload via the Files pane. For this example, suppose we have a CSV file named gdpCountry.csv located in a folder called data within our project.

Here’s how we load the CSV into a data frame:

After this, gdpCountryData will contain the data from the CSV. In this code:

-

"./data/gdpCountry.csv"is the path to the file../data/means there is a subfolder named “data” in our current working directory, and the file is inside it. - We assign the result of

read_csv()to a variable calledgdpCountryData. This new variable is a data frame that holds all the information from the CSV file. We can now manipulategdpCountryDatain R (filter it, summarize it, etc.).

If the CSV file has a header row (column names) at the top, read_csv will automatically use those as column names. If not, you may need to specify col_names = FALSE and assign names later. In our case, we assume the CSV had headers.

After loading, it’s good to do a quick check:

This will show the first few rows and the dimensions (to see how many rows and columns were read in).

Importing Data from Google Sheets

Another common scenario is collaborating on data via Google Sheets. Rather than exporting the data to CSV manually, R can directly read from a Google Sheet URL (if the sheet is shared appropriately). We will use the gsheet package, which provides a convenient gsheet2tbl() function to read Google Sheets into R.

Setup: First, make sure your Google Sheet is shareable. Open the sheet in your browser, click the Share button, and set it so that “Anyone with the link can view” (or edit, if you want others to collaborate). Copy the sharing URL. It will look something like: https://docs.google.com/spreadsheets/d/1uLaXke-KPN28-ESPPoihk8TiXVWp5xuNGHW7w7yqLCc/edit#gid=...

Notice it contains a long ID string (in this example, 1uLaXke-KPN28-ESPPoihk8TiXVWp5xuNGHW7w7yqLCc). This identifies the document.

Reading the Google Sheet: Use gsheet2tbl() with the sheet’s URL. For example:

# Load the gsheet package

library(gsheet)

# Use gsheet2tbl to read the Google Sheet into a data frame

data_unido <- gsheet::gsheet2tbl("https://docs.google.com/spreadsheets/d/1uLaXke-KPN...LCc/edit#gid=416085055")This will reach out to Google’s servers and pull the spreadsheet data into R as a data frame (actually a tibble, which is essentially the same for our purposes). The object data_unido now holds the UNIDO dataset we will work with. We can inspect it just as we would any imported data:

Often, we may also have a separate sheet (or CSV) that contains metadata, such as variable definitions or codes. In the example above, variablesUnido was mentioned, which likely contains descriptions of each variable in the UNIDO dataset. We could load that as well if needed:

variables_unido <- gsheet2tbl("https://docs.google.com/spreadsheets/d/1JYiydgI9QEO...MD8/edit#gid=919352283")Now variables_unido might contain a lookup table explaining what each code in the dataset means (for instance, that tableCode == 4 corresponds to “Number of Employees”, etc.). This can be useful for reference.

Other Data Formats and Tools

R can handle a variety of data formats:

-

Excel files: Use the readxl package (functions

read_excel(), etc.) or export Excel to CSV and useread_csv. -

Statistical software files (SPSS

.sav, Stata.dta, SAS.sas7bdat): Use the haven package, which providesread_spss(),read_stata(),read_sas(), etc., to import these files. - Databases: Use packages like DBI along with database-specific drivers (RMySQL, RPostgreSQL, etc.) to run SQL queries and import data from databases.

- APIs and Web Data: Packages like httr and jsonlite can be used to retrieve data from web APIs (often returning JSON or XML). Web scraping might involve packages like rvest.

-

Specialized formats: For example, readr has

read_tsv()for tab-separated data, and data.table hasfread()which can auto-detect formats and is extremely fast.

One notable package is data.table. It provides an alternative data frame structure and syntax in R that is highly optimized for speed and memory efficiency (often approaching the speed of comparable tasks in lower-level languages like C++). For very large datasets (millions of rows), data.table::fread() and the data.table syntax can significantly outperform base R or dplyr in reading and manipulating data. However, data.table has its own syntax which is a bit different from the tidyverse style we focus on here. If you are working with huge data and need speed, you might explore data.table, but for this chapter we will stick with tidyverse tools.

Recap – Importing: To summarize, here are the key functions for importing data we’ve seen:

-

read_csv("path/to/file.csv")– Read a CSV file from disk (from readr). -

gsheet2tbl("sheet_url")– Read a Google Sheet via URL (from gsheet). - (Plus mention of others like

read_excel,read_spss, etc., for completeness, but not demonstrated in code here.)

After importing data, the next steps are to explore and clean it. We’ll use the UNIDO data (data_unido) as our working example for the rest of this chapter.

4.4 Exploring and Preparing Data

Once data is loaded into R, the first thing to do is often to explore it: check its dimensions, peek at the first few rows, and get summaries of each column. This helps us verify that the import worked correctly and that we understand the structure of the data. We have already touched on some of the functions for this, but let’s go through a structured example using our data_unido dataset.

Assume data_unido is a data frame with the following columns (based on context from UNIDO data):

-

countryCode: a numeric or character code for countries (e.g., 124 for Canada). -

year: the year of observation (e.g., 2005, 2006, …, 2012). -

isicCode: a code representing an industry (using the ISIC classification, e.g., 131 might be textiles, 181 might be printing). -

tableCode: a code for the indicator being measured (e.g., 1 = number of establishments, 4 = number of employees, etc.). -

value: the value of the indicator (e.g., the number of employees in that industry, country, and year).

There may be other columns (the original data had up to 10 columns including some definitions and source info), but in our examples we will eventually narrow down to these key ones.

Let’s explore data_unido:

# View column names

colnames(data_unido)

# View first 6 rows

head(data_unido)

# View the last 6 rows

tail(data_unido)

# Check the size of the data frame (rows, columns)

dim(data_unido)

# Get a detailed structure of the data frame

glimpse(data_unido) # from dplyr, similar to str(data_unido)

# Or use base R:

str(data_unido)

# Get summary statistics for each column

summary(data_unido)The output of these commands will tell us:

- How many rows and columns (

dim) – we expect something like 655350 rows and 10 columns in the full UNIDO dataset. - The names of the columns (

colnames). - The first few entries (

head) to spot-check what the data looks like (are there headers? Does it seem to be reading correctly?). - The structure (

glimpse/str) – this is very useful as it lists each column with its type and a sample of values. We might see that some columns that should be numeric are currently character, etc. - The summary (

summary) – which for numeric columns will give min, median, mean, max, etc., and for factor/char will either list frequencies or say it’s not meaningful.

From earlier, we already converted some columns to numeric (countryCode, year, etc.). If we had not, glimpse might show them as <chr> (character). Assuming we did convert them, glimpse should show them as <dbl> (numeric type for doubles) now, which is what we want.

It’s common to drop some unnecessary columns at this stage, especially if the dataset has metadata or extra identifiers that we won’t use. For example, the UNIDO dataset had columns like isicCodeCombination, tableDefinitionCode, sourceCode, updateYear, unit which might not be needed for analysis after initial filtering. We can remove columns by assigning them NULL. For instance:

# Remove some unneeded columns to simplify the data frame

data_unido$isicCodeCombinaison <- NULL

data_unido$tableDefinitionCode <- NULL

data_unido$sourceCode <- NULL

data_unido$updateYear <- NULL

data_unido$unit <- NULL

# Verify the columns have been removed

colnames(data_unido)After this, data_unido should have fewer columns. In our case, it would now have columns like countryCode, year, isicCode, tableCode, and value (5 columns remaining). The data frame might be about 65,535 rows × 5 columns at this point (if we’ve filtered some, as we’ll do next). We can confirm with dim(data_unido).

Cleaning the data might also involve renaming columns to something more human-readable. The dplyr function rename() is useful for this. For example, we might rename tableCode to IndicatorCode or even directly to what it represents (if tableCode 4 = “Employees”, maybe we create an Employees column). We’ll see an example of renaming in the exercises.

Now that the data is imported and we have a sense of what’s inside, we can start wrangling the data to answer specific questions or prepare for analysis.

4.5 Manipulating Data Frames

The real work of data wrangling involves transforming the data: adding or removing columns, filtering for certain rows, sorting, combining data from multiple sources, and so on. R provides multiple ways to do these tasks; we will primarily use functions from dplyr for a consistent and readable approach. Recall that we should run library(dplyr) to have these functions available (if not done already).

Adding, Renaming, and Deleting Columns

Adding a new column: To add a new column to a data frame, you can use the assignment operator <- with the data frame and new column name. For example, suppose we want to add a column newColumn to data_unido where every value is 42 (perhaps as a placeholder or dummy variable):

# Add a new column named 'newColumn' with a constant value

data_unido$newColumn <- 42This creates newColumn as a column in data_unido and assigns 42 to every row. We can verify the addition by checking the column names or a slice of the data:

We can also create columns based on existing data. For example, we might scale a column or combine information from multiple columns. Say newColumn should actually be computed as double of value plus 5:

data_unido$newColumn <- data_unido$newColumn * 2 + 5

head(data_unido[,"newColumn"])If originally newColumn was 42 for all rows, now it will be 42 * 2 + 5 = 89 for all rows (since we haven’t varied it by row). This is just a trivial example to illustrate operations on columns.

Renaming columns: If you decide to change the name of a column (maybe newColumn isn’t descriptive), you can use dplyr::rename(). The syntax is rename(dataframe, newName = oldName). For instance:

Now, the column that was newColumn is called newColumnRenamed. We assigned the result back to data_unido (although rename will return the renamed data frame even if you don’t, it’s good practice to explicitly assign or use the pipe as we did).

Deleting columns: To remove a column, assign NULL to it as we showed earlier. For example, to remove newColumnRenamed now:

data_unido$newColumnRenamed <- NULL

colnames(data_unido)This will drop that column entirely from the data frame. (Another way to drop columns is using the select() function in dplyr, but assigning NULL is straightforward for removing single columns by name.)

After these operations, our data_unido is back to having only the original columns we care about. We now have a cleaner dataset with which to work.

Filtering Rows (Subsetting the Data)

Often, we don’t need all the data that we imported. We might be interested in a specific country, a specific time range, or a subset of indicators. Filtering refers to subsetting the rows of the data frame based on some condition.

The dplyr function for filtering is aptly named filter(). You provide it with a data frame and one or more conditions, and it returns only the rows that satisfy those conditions.

Example scenario: Using the UNIDO data, suppose we want to focus on Canada’s data, specifically on two indicators: number of establishments and number of employees, for years after 2009. Concretely:

- Canada is identified by

countryCode == 124(assuming the country codes follow the ISO numeric standard where 124 = Canada). - The indicator for “number of establishments” might be coded as 1, and “number of employees” as 4 (this is inferred from context).

- “After 2009” means

year > 2009(which would include 2010, 2011, 2012 given our dataset goes up to 2012).

We can apply these filters step by step or in one go. First, let’s filter the data for Canada:

Now data_unido_canada contains only rows where countryCode is 124. The dimension might be, say, 3888 rows and 5 columns (just as a reference from the dataset). Next, filter this down to only the desired indicators (tableCode 1 or 4):

Here we used the R logical operator | which means OR. The condition tableCode == 1 | tableCode == 4 keeps rows where the tableCode is either 1 or 4. Now data_unido_canada_vars should contain only the rows for Canada and for those two indicators. We can check the first and last rows to see years and ensure we have both indicators present.

Finally, filter for year > 2009:

This should leave us with data for Canada, indicators 1 and 4, for years 2010, 2011, 2012 (since the original data likely covers 2005–2012). The resulting number of rows might be around 972 (because it’s 3 years × 2 indicators × some number of industries, if each industry is a row; or if each row was an industry-year-indicator combination).

We could have combined all those filters into a single filter() call, like so:

data_unido_canada_post2009 <- filter(data_unido,

countryCode == 124,

(tableCode == 1 | tableCode == 4),

year > 2009)Dplyr filter() allows you to put multiple conditions separated by commas, which are treated as AND (all must be true). The above is equivalent to the step-by-step approach but does it in one shot.

At this point, we have drastically pared down the dataset to a manageable subset. This is often necessary before reshaping or plotting, because the full dataset might be too large or not relevant to the question at hand. In our case, going from 655k points to a few hundred or a thousand is useful for illustration and perhaps for focusing on a particular story (like how the number of establishments and employees in Canada changed post-2009).

The example above filtered by a specific country and indicators. You can filter by any condition needed:

- A range of years (e.g.,

year >= 2009 & year <= 2012for between 2009 and 2012 inclusive). - A set of countries (using

%in%, e.g.,countryCode %in% c(124, 840, 392)for Canada, USA, Japan if those are the codes). - Excluding missing values (

!is.na(column)). - Etc.

Logical operators recap: In R, & means AND, | means OR, and ! means NOT. When combining multiple conditions in filter(), commas act like AND. So filter(df, cond1, cond2) is the same as filter(df, cond1 & cond2).

Now that we have filtered data, let’s look at sorting.

Sorting Data

Sorting (ordering) the data can help in viewing it or preparing it for output. For example, we might want to sort the filtered Canadian data by the value column to see which industry-year had the highest number of employees or establishments.

The dplyr function for sorting is arrange(). By default, arrange(data, columnName) will sort the data frame by that column in ascending order (smallest to largest). For descending order, you wrap the column in desc(). You can also sort by multiple columns (it will sort by the first, and break ties by the second, and so on).

Using our data_unido_canada_post2009 example (which has columns countryCode, year, isicCode, tableCode, value):

- If we want to sort by

valueascending:

- If we want the largest values first (descending order):

Comparing head(data_sorted) and head(data_reverse) would show that the first rows of one are the smallest values and of the other are the largest values. Sorting can be useful before saving data to a file or just to quickly identify extremes.

We could also arrange by multiple keys. For example, maybe we want data sorted by year, then by value within each year:

This would sort the data by year ascending, and for the same year, sort by value descending (so the largest value in each year comes first for that year).

At this point, we’ve covered how to get a subset of the data that we need and how to sort it. Next, we’ll discuss reshaping the data between long and wide formats, which is often needed to meet the “tidy data” requirements or to prepare for certain analyses or visualizations.

4.6 Reshaping Data: Long vs Wide Format

Data can be structured in different ways. Two common structures are wide format and long format:

- Wide format: Each distinct variable has its own column, and each row might represent an entity with multiple observations across those columns. For example, a wide format could have one row per country, and separate columns for each year’s GDP.

- Long format: There is typically one column that holds values and another column that indicates what kind of value it is (with each observation occupying one row). In long format, you might have one row per country-year, and a column indicating which year it is and another column for the GDP value.

Tidy data, as discussed, often corresponds to a long format: each observation is one row. However, data is often recorded or presented in wide format. We need to be able to convert between these formats. In R, the tidyr package provides the functions pivot_wider() and pivot_longer() to do this transformation (these replace the older spread() and gather() functions).

From Long to Wide

Let’s use our filtered Canadian data as an example. Currently, data_unido_canada_post2009 is in a long format: each row is an observation (with country, year, indicator code, and value). Suppose we want to create a wide format where we have separate columns for each industry’s value. For example, one column for the textile sector (ISIC 131) employees, and one for the printing sector (ISIC 181) employees, for each year.

Why might we want to do this? Perhaps to compare those two industries side by side over years for Canada. A wide format could have:

- Columns: year, tableCode (indicator), countryCode, and then separate columns for each selected

isicCode(131, 181). - Rows: each row might correspond to a unique combination of year, tableCode, countryCode (in our filtered data, countryCode is constant 124 for Canada, tableCode might be 1 or 4 depending on if we included both employees and establishments – we did).

- The cells in the new industry-specific columns would be the

valueof that indicator for that industry.

Our filtered data data_unido_canada_post2009 currently includes two tableCodes (1 and 4). To keep it simple, let’s further filter to just one indicator, say tableCode 4 (number of employees), before widening, because mixing two different indicators in a single wide table can be confusing (they would become separate rows anyway if tableCode remains a column). Alternatively, we could include tableCode as part of the key so that employees and establishments don’t mix.

For demonstration, assume we want to compare two industries: ISIC 131 vs ISIC 181 (textiles vs printing) for the number of employees (tableCode 4) in Canada, years 2010–2012. We can create two data subsets and then merge, or directly pivot. Let’s try pivoting directly on the dataset that contains both industries.

We will use pivot_wider(). Its key arguments are:

-

names_from: the column whose values will become new column names. -

values_from: the column whose values will fill those new columns.

In our case:

-

names_from = isicCode(because we want a column for each distinct isicCode). -

values_from = value(because those are the numbers we want in the cells).

We should ensure that the combination of the other columns (year, countryCode, tableCode) is unique for each isicCode in the data we pivot. In our dataset, for each year and tableCode, there might be multiple industries. When we pivot wider, each row of the wide table will correspond to one combination of the non-pivoted columns.

Let’s do it:

After this pivot:

- The new

wide_datashould have columns: year, countryCode, tableCode, and then one column for each unique isicCode present indata_unido_canada_post2009(in our filtered case, it might be many industries unless we filtered to just a couple of ISIC codes; let’s assume we only kept a couple for simplicity). If we hadn’t filtered, it could be a lot of columns (the example given said 165 columns, which suggests 163 industry codes plus year and a couple other keys). - Each row of

wide_datarepresents a single year-country-indicator combination. In our case, country is fixed as Canada (124) and indicator could be 1 or 4 if we left both. If both 1 and 4 are present, then for each year we would have two rows (one for tableCode 1, one for 4). - For each such row, the value for a particular industry code will appear in that industry’s column, and presumably the other industry columns will be NA for that row (because a given row is one observation of one indicator in one industry).

The dimension output (dim(wide_data)) might show, for example, 6 rows and (some number of columns). The original text said 6 lines and 165 columns for a similar operation, which likely means they had 6 rows (maybe 3 years × 2 indicators?) and 165 columns (for each industry code present).

Our wide format is not tidy (because each industry’s values are split into separate columns), but sometimes wide format is needed, for instance, for certain types of analysis or simply for readability or exporting to another program.

From Wide to Long

Now, suppose we have a wide format data frame (like wide_data we just created) and we want to go back to a long format (tidy format). We use pivot_longer() for this. The arguments for pivot_longer() are essentially the opposite of pivot_wider():

- We need to specify which columns to gather into key-value pairs. Often, it’s easier to specify which columns not to pivot (like the ID or key columns that should remain as is). We can use the special syntax

!c(col1, col2, ...)to indicate “all columns except these”. - We specify

names_tofor the name of the new column that will contain what was formerly column names (e.g., “isicCode”), andvalues_tofor the new column that will contain the values from those columns.

Continuing our example, to pivot wide_data back to long format:

long_data <- wide_data %>%

pivot_longer(

cols = !c(year, countryCode, tableCode), # all columns except these key columns

names_to = "isicCode",

values_to = "value"

)

dim(long_data)

head(long_data)A couple of things to note:

- We used

!c(year, countryCode, tableCode)incols. The exclamation mark!in front ofc(...)means “not these columns”. So we are tellingpivot_longerto gather all columns except year, countryCode, and tableCode. These excepted columns will remain as they are (they are the identifier columns that stay in each row). - The result will have a column named

isicCode(as we specified) and a column namedvalue. Every row oflong_datais now a single observation: it has a year, a countryCode, a tableCode, an isicCode, and a value. In fact, if we did everything correctly,long_datashould look identical to our originaldata_unido_canada_post2009(except maybe the order of rows might differ, and the type ofisicCodemight be character now because it came from column names, but we could convert it back to numeric).

The dimension (dim(long_data)) should match the original number of filtered observations we had before widening. The head(long_data) will show a few rows and we expect to see columns for year, countryCode, tableCode, isicCode, value.

Important: For creating plots and running most analyses in R, the long format is usually required. For example, ggplot2 (for visualization) expects data in long format: each row is one observation. If your data is in wide format, you often need to convert it to long before using such tools.

So, why do we ever use wide format? Wide format can be useful for:

- Presenting data in tables (reports, spreadsheets) where each column is an easy-to-understand category.

- Performing certain operations that are easier in wide form (e.g., matrix operations, or calculating differences between two specific columns).

- Exporting to formats or software that expect wide data (Excel users often prefer wide format tables).

In R, though, analysis tends to favor long format, and we will proceed with data in long format for the remainder of the chapter.

We have now covered how to reshape data, which is a key part of wrangling when dealing with multiple variables that could be structured in different ways.

4.7 Merging Datasets

Data often comes split across multiple tables. For example, you might have one table with economic indicators and another with geographic information; or, as our earlier anecdote suggested, you might have a customer information table and another table with customer transactions. Combining data from different sources is another fundamental operation in data wrangling.

R provides join functions to merge data frames. The most common is left_join() from dplyr, which merges two data frames based on a common key, keeping all observations from the “left” data frame and adding matching information from the “right” data frame.

Before we demonstrate merging in R, let’s motivate it with an example scenario: Imagine you have two hacked datasets (to borrow the chapter’s playful scenario): one from a bank containing customer account details, and another from a credit bureau containing social security numbers. By joining them on a common identifier (like name or SSN), you could suddenly have a much richer dataset about each customer. This is exactly what companies do legitimately when they integrate data from different departments or partner organizations: merging datasets gives new insights by combining information.

In our case, we will merge subsets of the UNIDO data. Let’s create two small data frames from data_unido:

-

data_canada_131: Data for Canada (countryCode == 124), for the textile sector (isicCode == 131), for the number of employees (tableCode == 4), and for years after 2008. -

data_canada_181: Similar, but for the printing sector (isicCode == 181).

These two data frames represent two different industries in Canada. Each one, if filtered to tableCode 4 and after 2008, should have data for years 2009–2012 (assuming data exists for those years) on number of employees. Our goal is to merge these so that we can directly compare the values side by side.

First, let’s obtain these datasets (we assume data_unido is still our full dataset loaded from GSheet but possibly with extraneous columns removed and types set):

# Ensure dplyr and tidyr are loaded

library(dplyr)

library(tidyr)

# Create first dataset: Canada, ISIC 131 (textiles), employees (tableCode 4), year > 2008

data_canada_131 <- data_unido %>%

filter(countryCode == 124, isicCode == 131, tableCode == 4, year > 2008) %>%

pivot_wider(names_from = isicCode, values_from = value)

head(data_canada_131)

# Create second dataset: Canada, ISIC 181 (printing), employees (tableCode 4), year > 2008

data_canada_181 <- data_unido %>%

filter(countryCode == 124, isicCode == 181, tableCode == 4, year > 2008) %>%

pivot_wider(names_from = isicCode, values_from = value)

head(data_canada_181)A few things to note in this code:

- We applied the filters inside the pipe for clarity.

data_canada_131now should contain only data where countryCode is 124, isicCode 131, etc. After filtering, we usedpivot_wider. Since in each of those filtered sets there’s only oneisicCodevalue, pivot_wider will essentially turn thevaluecolumn into a column named “131” (or “181” for the second dataset). It’s a way to prepare for the join: each dataset now has a column with the industry code as the name and the employee count as the value. - The key columns that remain in each (besides the new “131” or “181” column) should be year, countryCode, and tableCode. For our filtered data, countryCode is constant (124) and tableCode is constant (4) within each data frame, but they are still present as columns. Year will vary (2009, 2010, 2011, 2012 for instance).

- We could have dropped countryCode and tableCode since they are constant (for these particular subsets), but it won’t hurt to keep them as keys for the merge.

Now, to merge data_canada_131 and data_canada_181, we need to have common key columns. By inspecting, both should have year, countryCode, and tableCode. Those make sense as keys: we want to align the data by year (and it’s the same country and indicator in both). We will use left_join to combine them:

In left_join(X, Y, by = c("col1","col2",...)), the first argument X is the left table (in our case data_canada_131), the second argument Y is the right table (data_canada_181), and by specifies the columns that should match between the two. We used the vector of column names c("year","countryCode","tableCode") as the key. We could also have left out by and, if the column names are identical in both data frames, left_join would guess them; but it’s clearer to be explicit.

The result data_canada_full will have:

- All rows from

data_canada_131(the left table). That means all years after 2008 that had textile (131) data. - It will add the matching columns from

data_canada_181where the year, country, and tableCode match. - If there was a year present in

data_canada_131that isn’t indata_canada_181, the new columns fromdata_canada_181(i.e., the “181” column) will be NA for that year. Ifdata_canada_181had an extra year that 131 didn’t, those would not appear because left_join keeps only rows from the left side. (An alternative would befull_jointo keep all, orinner_jointo keep only matching rows; but we chose left_join as a common approach.)

Given our filtering, both likely cover 2009–2012, so they should align perfectly, and data_canada_full should have one row per year (2009, 2010, 2011, 2012) for Canada, with tableCode 4, and two additional columns: one named “131” and one named “181”, containing the employee counts in those industries. We can confirm by looking at head(data_canada_full) (though that will show all since there are only a few rows).

Now, data_canada_full is in a wide format (year, countryCode, tableCode, 131, 181). If we want to get it back to a tidy long format (e.g., for plotting), we can pivot longer:

data_canada_full_long <- data_canada_full %>%

pivot_longer(

cols = !c(year, countryCode, tableCode),

names_to = "isicCode",

values_to = "value"

)

head(data_canada_full_long)After this, data_canada_full_long will have columns: year, countryCode, tableCode, isicCode, value, and each row will be a single observation (e.g., Canada, 2010, tableCode 4, isicCode 131, value X; then Canada, 2010, tableCode 4, isicCode 181, value Y; etc.). Essentially, we’re back to a long format where the two industries’ data are stacked, but this time we only have those two industries in the dataset.

This data_canada_full_long is now ready for further analysis or visualization (for instance, we could create a plot of employees in Textile vs Printing in Canada over 2009–2012).

Finally, if we want to save this prepared dataset to a file (to perhaps use in a visualization chapter or share), we can write it to a CSV:

This will create a file dataCanadaFullLong.csv in your working directory (or specified path) containing the year, countryCode, tableCode, isicCode, and value columns for the two industries we merged. We now have a clean, merged dataset that came from two different subsets originally.

4.8 Saving Your Data

It’s worth noting that after wrangling data, you often want to save the clean or transformed version for later use. We just saw an example of using write_csv() from readr to save a data frame to a CSV file. The base R alternative is write.csv(), but write_csv is a bit faster and doesn’t add row numbers by default. The usage is straightforward: give it the data frame and the desired file path.

Other functions for exporting:

-

write_rds()(from readr) can save an R object in a binary RDS format, which preserves data types and is often more compact; you load it back withread_rds(). - If collaborating with Excel users, the writexl or openxlsx packages can write

.xlsxfiles. - For databases, you’d use DBI functions to append or copy data into database tables.

For now, saving to CSV is a simple and effective way to export the results of our wrangling.

4.9 Summary

In this chapter, we covered the essential steps of data wrangling in R:

- We learned about R’s approach to functions and packages, and how to use

library()andpackage::function()to access the tools we need. - The pipe operator

%>%was introduced as a way to make code more readable by chaining operations. - We reviewed the concept of tidy data (each variable in a column, each observation in a row, each value in a cell) and saw how this principle guides data structuring in R.

- We practiced importing data from CSV files and Google Sheets into R data frames, and discussed other formats briefly.

- Using a real dataset, we explored how to inspect data frames (viewing columns, sizes, and summaries) and ensure the data types are correct, converting types when necessary.

- We added new columns to a data frame, renamed and removed columns, using base R and dplyr approaches.

- We filtered rows based on conditions with

filter(), allowing us to focus on subsets of the data. - We sorted data frames with

arrange()to order the observations. - We reshaped data between long and wide formats using

pivot_wider()andpivot_longer(), and reinforced why long format (tidy format) is often preferred for analysis. - We merged data from two different subsets using

left_join(), demonstrating how to align data on keys and combine it into one data frame. - Finally, we showed how to save the cleaned/wrangled data to a CSV file for future use.

Below is a table of some key R functions we encountered and their purposes:

| Function | Purpose |

|---|---|

read_csv() |

Read data from a CSV file into a data frame (tibble). (From readr) |

gsheet2tbl() |

Read data from a Google Sheets URL into a data frame. (From gsheet) |

head() / tail()

|

Display the first / last rows of a data frame (default 6 rows). |

colnames() |

Show or set the column names of a data frame (or matrix). |

dim() |

Show the dimensions (number of rows and columns) of a data frame. |

str() / glimpse()

|

Display the structure of a data frame (columns types and examples). glimpse is from dplyr and is similar to str but formatted nicely for tibbles. |

summary() |

Produce summary statistics of each column in a data frame. |

as.numeric() / as.character() / as.factor()

|

Convert a vector (or data frame column) to numeric, character, or factor type, respectively. |

$ (dollar sign) |

Extract or create a column in a data frame (df$col), also used to assign to a column (df$newcol <- ...). |

library() |

Attach an R package, making all its functions available by name. |

%>% (pipe) |

Pass the output of one expression as the input to the next, enabling chaining of commands. (From magrittr/dplyr) |

filter() |

Subset rows of a data frame based on condition(s). (From dplyr) |

rename() |

Rename columns in a data frame. (From dplyr) |

arrange() |

Sort a data frame’s rows by the values of one or more columns. (From dplyr) |

desc() |

Helper function to sort in descending order (used inside arrange). (From dplyr) |

pivot_wider() |

Convert data from long to wide format, creating new columns for levels of a key variable. (From tidyr) |

pivot_longer() |

Convert data from wide to long format, gathering columns into key-value pairs. (From tidyr) |

left_join() |

Merge two data frames, keeping all rows from the left and adding matching data from the right. (From dplyr) |

write_csv() |

Write a data frame to a CSV file. (From readr) |

With these tools, you can accomplish a vast array of data cleaning and transformation tasks. Data wrangling often requires creativity and problem-solving, as every dataset can have its own quirks. The functions above are like a toolkit—you will combine them in different ways depending on what the data looks like and what form you need it to be in for your analysis.

In the next chapter, we will build on this foundation and explore data visualization, where we take our tidy, wrangled data and create insightful graphs.

4.10 Exercises: Practice Your Data Wrangling Skills

It’s time to get your hands dirty and practice the concepts from this chapter. In this exercise, we will work step-by-step through a data wrangling task using a sample dataset. The exercise is broken down into parts, and by the end you will have applied importing, filtering, reshaping, and joining on the data.

For these exercises, assume we have:

- A CSV file containing GDP data (

chapter6data.csv). - A Google Sheet containing geographical coordinates (

locationsdata). - We will combine these to get a final dataset.

Step 1: Import a CSV file Import the CSV file called chapter6data.csv (which contains GDP data for various countries and years) into R and store it in a data frame gdp. Use readr::read_csv() to do this.

-

Import the CSV file:

(Fill in the path or ensure your working directory is set so that the file can be found.)

Step 2: Import a Google Sheet Import a dataset containing longitude and latitude for countries from the Google Sheet available at the given URL. Store this in a data frame locations. Use the gsheet2tbl() function for this.

-

Import the Google Sheet:

library(gsheet) locations <- gsheet2tbl("https://docs.google.com/spreadsheets/d/1nehKEBKTQx11LZuo5ZJFKTVS0p5y1ysMPSOSX_m8dS8/edit?usp=sharing")Make sure the Google Sheet is shared publicly (or at least accessible via the link). The

locationsdata might have columns like Country, Longitude, Latitude.

Step 3: Delete an unnecessary column The gdp data frame may have an extra index column (for example, sometimes CSVs exported from certain systems include an unnamed index as the first column, which might appear as X1 in R). Remove the column named X1 from the gdp data frame if it exists.

-

Remove the

X1column fromgdp:gdp$X1 <- NULLConfirm that the column is gone by checking

colnames(gdp).

Step 4: Filter the data We only want to focus on a few countries in this analysis. Filter the gdp data to keep only the following countries: United States, Canada, Japan, Belgium, and France.

-

Filter

gdpfor specific countries:Here we assume the GDP data frame has a column named

country. We used the%in%operator to match a set of values. The resultgdp2contains data only for those five countries.

Step 5: Reshape data from wide to long Suppose the gdp2 data is in a wide format, where each year might be a separate column (e.g., columns for 2015, 2016, 2017, etc., containing GDP values). We want to convert this to a long format with three columns: “country”, “year”, and “gdp”. Use pivot_longer() to gather the year columns.

-

Convert

gdp2to long format:library(tidyr) gdp3 <- gdp2 %>% pivot_longer( cols = -country, # all columns except 'country' names_to = "year", values_to = "gdp" )In this code, we assume

gdp2has a column namedcountryand other columns that are year names (like “2015”, “2016”, etc.). The-countryincolsmeans we take all columns except country (thus all the year columns) to pivot into long format. After this,gdp3will have three columns: country, year, and gdp, where each row is the GDP of a country in a particular year.

Step 6: Merge the datasets Now we have gdp3 (country-year-GDP data) and locations (country-longitude-latitude data). We want to combine these so that for each country and year, we also have the location information. The locations data likely has one row per country (since coordinates don’t change by year). We will join locations to gdp3 by the country column.

-

Join

locationswithgdp3:gdp4 <- left_join(gdp3, locations, by = "country")This will add the longitude and latitude columns from

locationsto thegdp3data frame, matching on the country name. We useleft_joinwithgdp3as the left table so that all country-year combinations remain, and we bring in the coordinates for those countries. If a country ingdp3wasn’t found inlocations, the coordinates would be NA (but since we filtered to specific countries that hopefully are in the locations data, we should get matches for all).

After completing these steps, gdp4 should contain the country, year, GDP, and location (longitude, latitude) for the five selected countries. This dataset is now ready for any geographical visualization or further analysis you might want to do (for example, mapping GDP values on a world map, etc.).

Make sure to test each step and inspect the results using functions like head(), dim(), and summary() to ensure the transformations are correct. Happy wrangling!