This course will teach you how to create a wordcloud. You will find a glimpse of the technique we used to manipulate our tweets on AI. We used the methodology developed by Silge and Robinson (2016) in their book “Text Mining with R”.

Loading the data

First, let’s load the csv files containing the tweetsAI on AI. We collected 10,000 tweetsAI on Artificial Intelligence between 11 and 12 November 2019 with two specific keywords: “ArtificialIntelligence” and “Artificial Intelligence”.

Merging the data

The second step is to merge the two datasets into one.

Cleaning the data

After merging the data, it needs to be a little cleaned up. We want to keep unique tweetsAI but we collected the tweetsAI at different time which means that we potentially have the same tweetsAI collected at different time. This results in a change in the likes count, retweetsAI count and replies count. Then the unique() function will not remove the tweet even if it’s the same because the count is not the same. Therefore we need to remove these columns and apply the unique() function.

Now, we want to filter the data to keep only the tweetsAI of November 11, 2019. That leaves us with about 6,000 tweetsAI.

# Filtering the tweetsAI

tweetsAI <- filter(tweetsAI, date == "2019-11-11")

As we have the tweetsAI over a one-day period, now we can only keep the column tweet (containing the actual tweet of a person).

text <- tweetsAI$tweet

Then, we need to clean up the text (tweet) by removing retweet entities, at people, punctuation, numbers, html links and “pictwitter”.

# remove retweet entities

text <- gsub("(RT|via)((?:\\b\\W*@\\w+)+)", "", text)

# remove at people

text <- gsub("@\\w+", "", text)

# remove punctuation

text <- gsub("[[:punct:]]", "", text)

# remove numbers

text <- gsub("[[:digit:]]", "", text)

# remove html links

text <- gsub("http\\w+", "", text)

# remove all pictwitter

text <- gsub("pictwitter\\w+ *", "", text)

Tidying the data

In order to turn the text (tweet) into a tidy text dataset, we first need to put it into a data frame.

The unnest_tokens function

Within our tidy text framework, we need to both break the text into individual tokens (a process called tokenization) and transform it to a tidy data structure. To do this, we use tidytext’s unnest_tokens() function.

library(tidytext)

tidy_tweetsAI <- text_df %>%

unnest_tokens(word, text)

Removing stop words

Now that the data is in one-word-per-row format, we will want to remove stop words; stop words are words that are not useful for an analysis, typically extremely common words such as “the”, “of”, “to”, and so forth in English. We also need to create a custom stop-words list using bind_rows() to add the keywords used to download the tweets. Therefore, let’s remove words such as “artificial”, “intelligence”, “artificialintelligence” and “ai”.

custom_stop_words <- bind_rows(tibble(word = c("artificial", "intelligence", "artificialintelligence", "ai"),

lexicon = c("custom")),

stop_words)

custom_stop_words

# A tibble: 1,153 x 2

word lexicon

<chr> <chr>

1 artificial custom

2 intelligence custom

3 artificialintelligence custom

4 ai custom

5 a SMART

6 a's SMART

7 able SMART

8 about SMART

9 above SMART

10 according SMART

# … with 1,143 more rowsNow, let’s remove the custom stop words (kept in the tidytext dataset custom_stop_words) with the anti_join() function from the dplyr package.

tidy_tweetsAI <- tidy_tweetsAI %>%

anti_join(custom_stop_words)

Word frequencies

Then, we need to find the most common words in all the tweets by using dplyr’s count() function.

tidy_tweetsAI <- tidy_tweetsAI %>%

count(word)

Of course, you can do all these steps at once.

tidy_tweetsAI <- text_df %>%

unnest_tokens(word, text) %>%

anti_join(custom_stop_words) %>%

count(word)

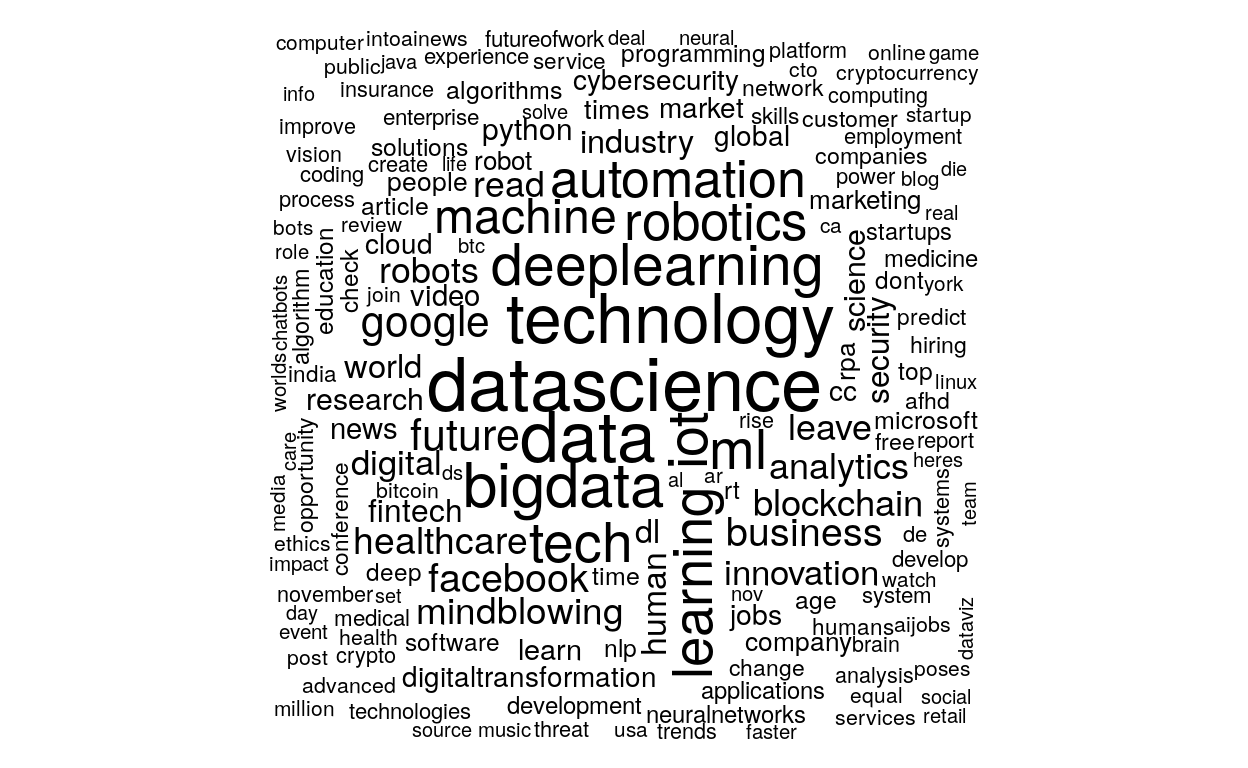

Wordclouds

As we have a tidy text format, we can make a wordcloud to see what people say about AI.

library(wordcloud)

tidy_tweetsAI %>%

with(wordcloud(word, n, max.words = 200, random.order = FALSE, rot.per = 0.15))